Working with AI can feel like working with a black box. When the AI shows its work, users can understand its logic, intervene when necessary, or even learn from its approach.

Showing your steps is a human pattern that builds trust. Say you manage someone and ask them to take on a task they haven't performed before. You might ask them to walk you through their strategy and check in with you before they go off to execute that plan. Trust works both ways, though. If that person felt lost, you could coach them by showing how you would approach the task, and then empower them to put that plan into action.

Both of these scenarios show up in apps using this pattern today.

Show my work

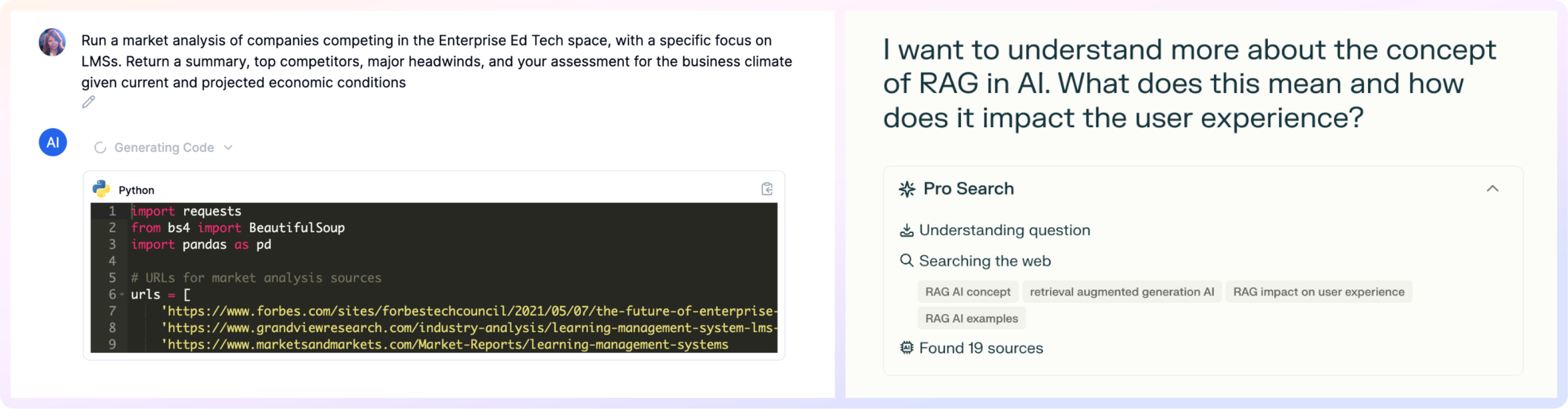

RAG apps like Julius.ai and Perplexity transparently show the steps they took to reach a response. The AI does not ask the user to confirm before it proceeds, but surfacing each step gives the user a moment to stop the generation and re-orient the AI. In these scenarios, the AI leaves footprints that the user can follow later to understand how it arrived at its response, and to tune their input if needed.

Check my work

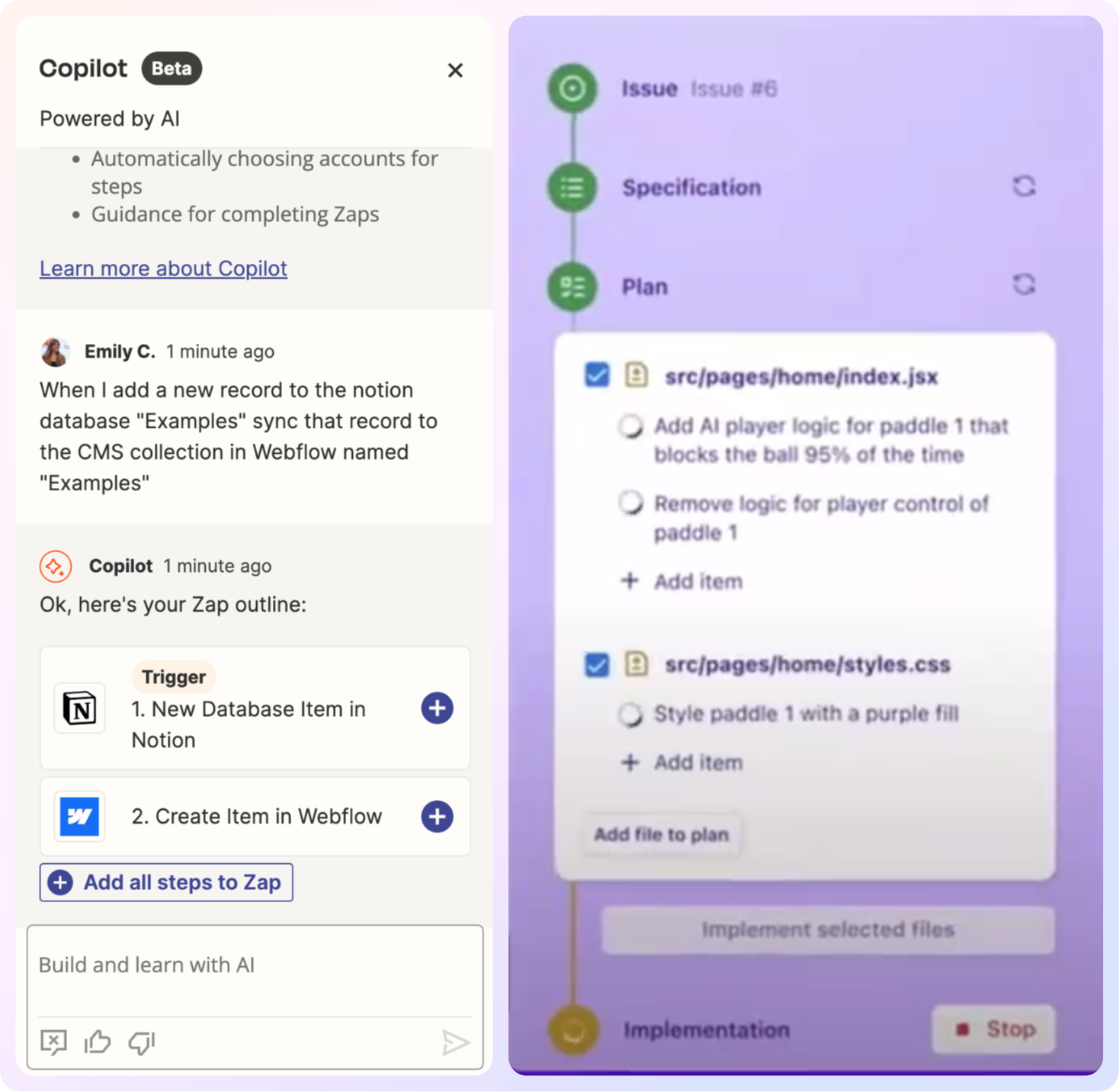

For more complicated tasks, particularly those assigned to a copilot, the user gets an opportunity to approve the AI's approach before it is put into play. GitHub Copilot shows every file it will touch, which reduces the risk of a mistake. Zapier provides a similar level of transparency for AI-driven Zaps.

For tasks that repeat regularly, consider using a workflow instead. The AI can then move quickly in the background, because the user has already approved its route.