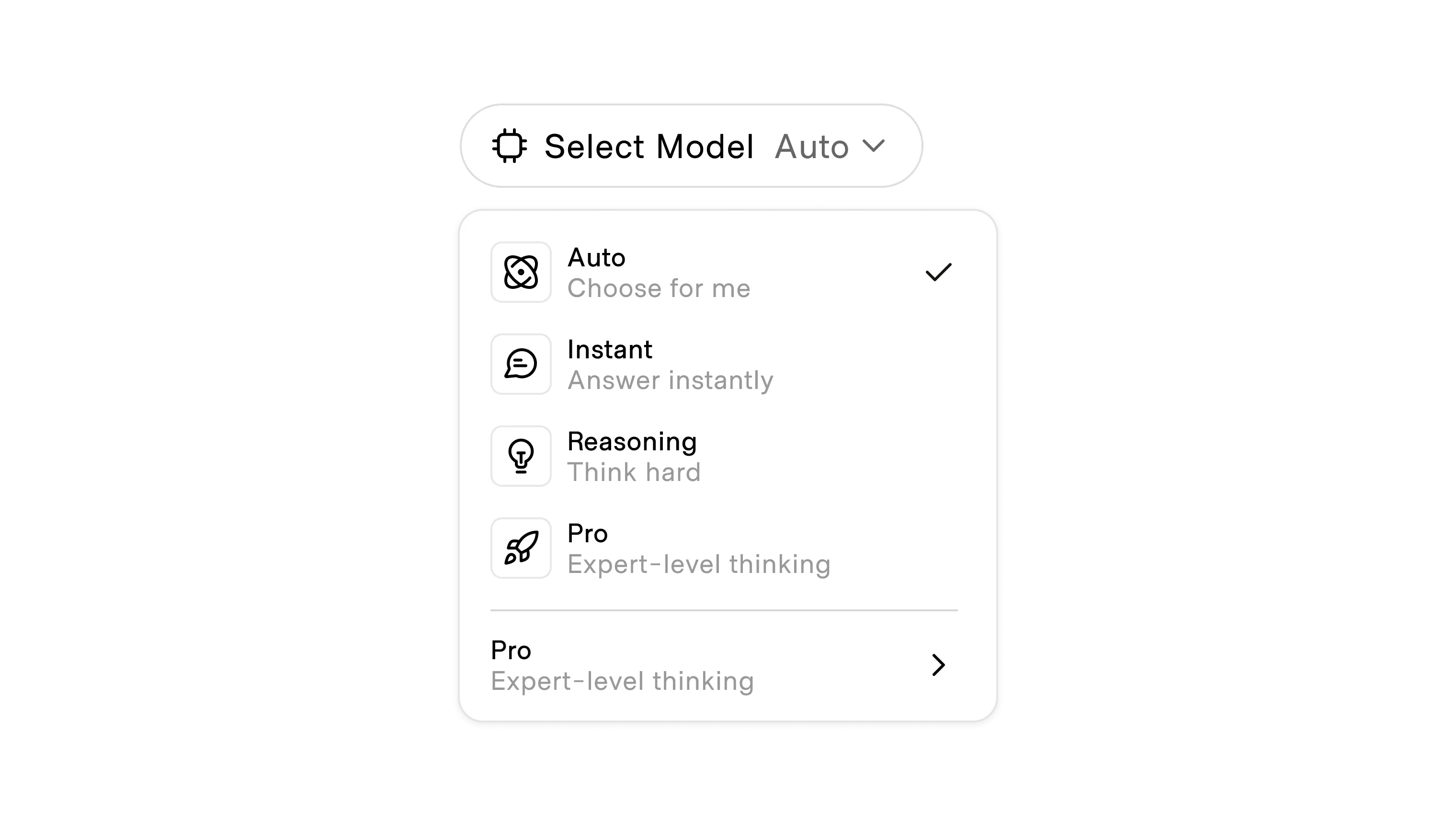

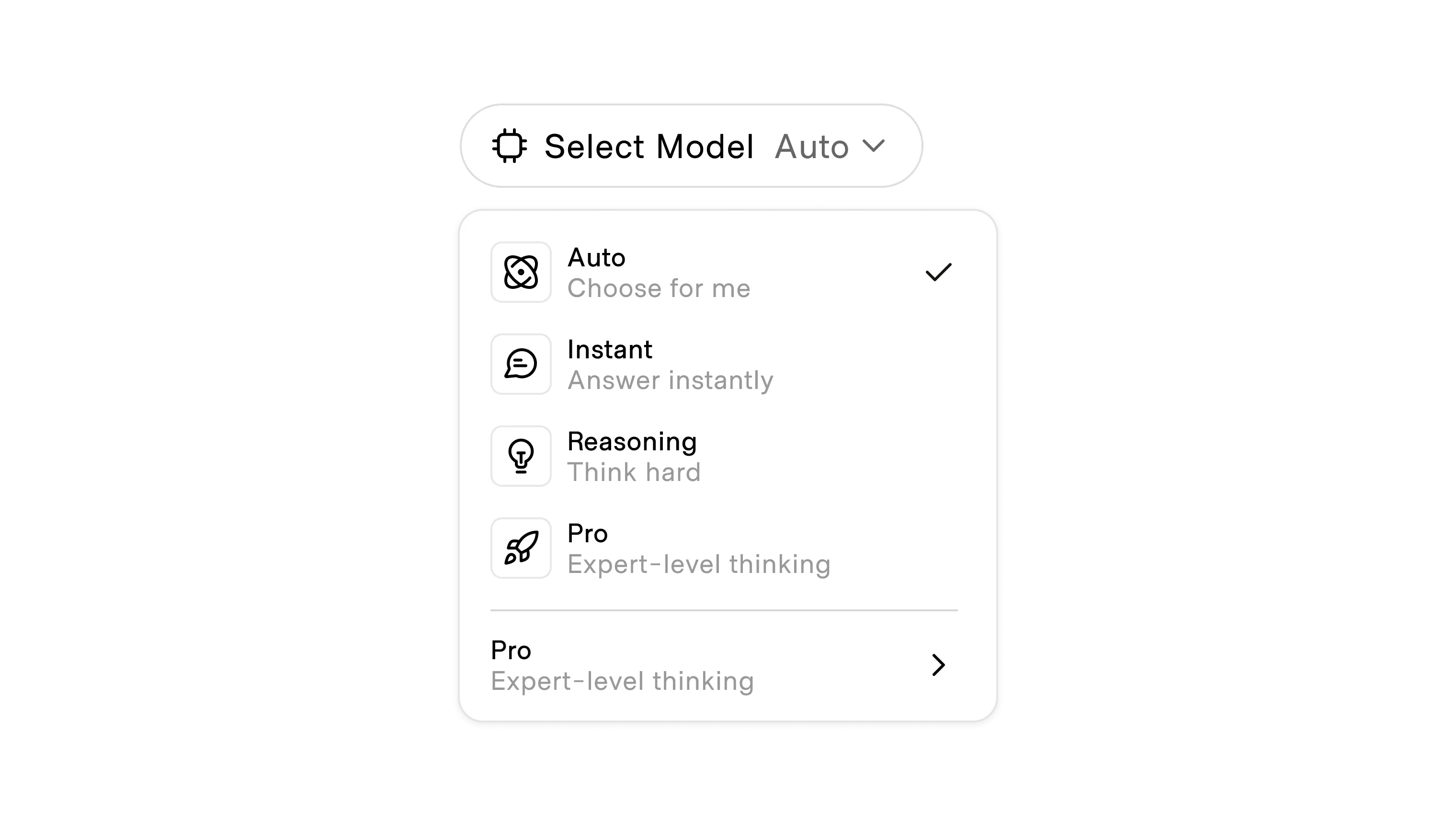

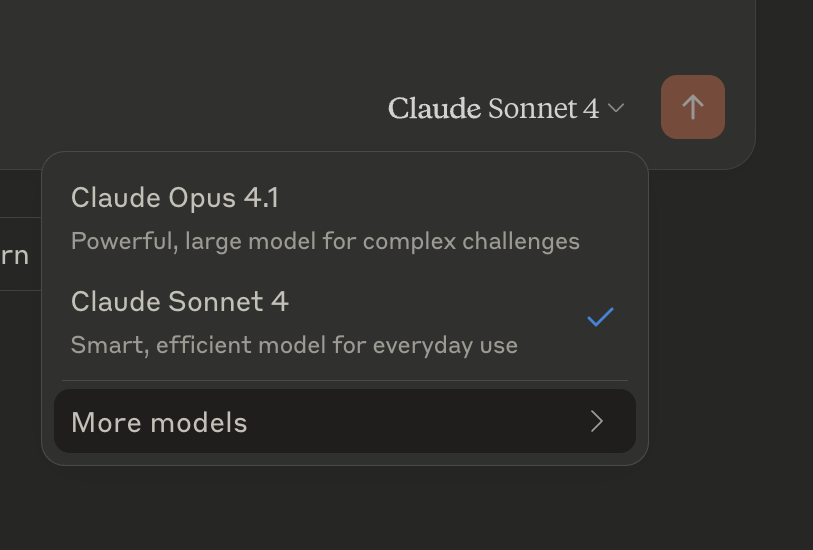

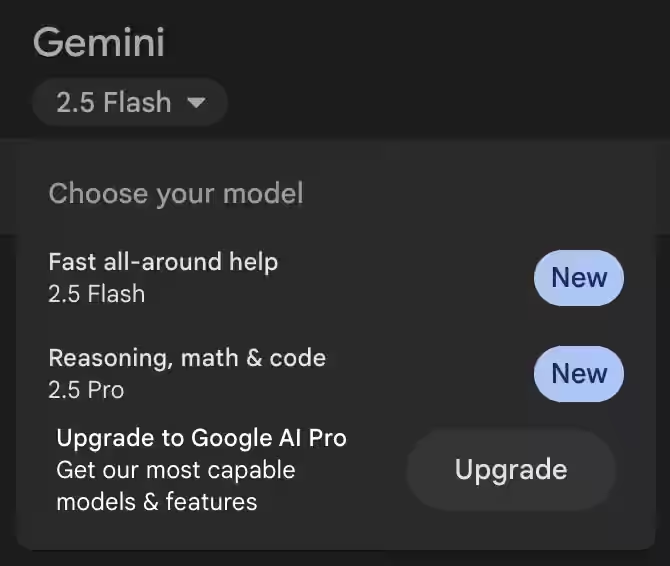

There are many reasons why a user may want to switch the model they are using for generation. Different models come with different capabilities and tradeoffs. Letting users change their model on the fly, or at least see which one the AI is using at any given time, has become a standard pattern across AI products.

Why users might switch models

- Accuracy and reliability: Some models are more prone to hallucinations or errors depending on their training data and foundational prompts.

- Recency of knowledge: Newer models are often trained on larger and more recent datasets, which can lead to more relevant outputs.

- Cost considerations: Advanced models typically carry higher token or subscription costs. Users may prototype prompts on cheaper models before scaling them on premium ones.

- Aesthetic differences: In image generation, different models carry distinct styles. Users may choose a specific model for its “look,” much like preferring vinyl for its character even if digital audio is technically higher fidelity.

- Remixing across models: Some tools allow users to generate in one model for its style and then refine or re-render in another for structure or predictability.

- Security concerns: Users may avoid certain models when handling sensitive or proprietary data depending on how the provider manages training, storage, and compliance.

- Research and comparison: Engineers, analysts, and researchers often run the same task across multiple models to benchmark performance.
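The cost and comparison cases above can be made concrete with a small sketch. It assumes a hypothetical catalog of two models with made-up prices, and uses a stand-in `fake_generate` function in place of a real provider call; none of these names come from an actual product or API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ModelOption:
    """One selectable model. Names and prices are illustrative placeholders."""
    model_id: str
    price_per_1k_tokens: float  # hypothetical price, arbitrary currency units


def estimate_cost(model: ModelOption, token_count: int) -> float:
    """Estimate what a run of `token_count` tokens would cost on `model`."""
    return model.price_per_1k_tokens * token_count / 1000


def compare_models(
    prompt: str,
    models: list[ModelOption],
    generate: Callable[[str, str], str],
) -> dict[str, str]:
    """Run the same prompt through every model and collect outputs by model id."""
    return {m.model_id: generate(m.model_id, prompt) for m in models}


def fake_generate(model_id: str, prompt: str) -> str:
    """Stand-in for a real provider call, so the sketch runs offline."""
    return f"[{model_id}] response to: {prompt}"


# Hypothetical catalog: a cheap model for drafting, a premium one for final runs.
MODELS = [
    ModelOption("small-draft", 0.5),
    ModelOption("large-premium", 10.0),
]

results = compare_models("Summarize this paragraph.", MODELS, fake_generate)
for model in MODELS:
    print(model.model_id, estimate_cost(model, 2000), results[model.model_id])
```

Showing the estimated cost next to each output is one way to let users judge whether the premium model earns its price for a given prompt.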

Model management is no longer optional. As models proliferate and differentiate, users expect the ability to select, compare, and control which system is powering their results.

Model tiers

Model choice is often structured into tiers, each with its own tradeoffs:

- Free models: Typically smaller, cheaper, and more limited. Useful for casual exploration, onboarding, or testing prompts before scaling.

- Pro models: Larger, more up-to-date, and more capable. These are often gated behind subscriptions or pay-per-use pricing.

- Enterprise models: Scoped for compliance, security, and governance. Organizations may lock users into enterprise models to enforce retention, privacy, or regional restrictions.

- Domain-specialized models: Built or fine-tuned for specific domains such as coding, medicine, law, or creative work. These provide focused strengths but may sacrifice general-purpose ability.

The tier system creates a predictable progression for users, but it also shapes how they perceive value. A free or lighter model may be “good enough,” saving money and compute, while a more powerful option can unlock higher quality if the product makes that difference visible.
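One way a model picker might encode these tiers is sketched below. It assumes the tiers are strictly ordered (free, then pro, then enterprise), treats domain specialization as an attribute rather than a tier, and uses a hypothetical catalog and an optional organization allowlist. Real products may gate access quite differently.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Optional


class Tier(IntEnum):
    """Assumed ordering: a higher tier includes access to the lower ones."""
    FREE = 0
    PRO = 1
    ENTERPRISE = 2


@dataclass(frozen=True)
class Model:
    model_id: str
    tier: Tier
    domain: Optional[str] = None  # set for domain-specialized models


# Hypothetical catalog; the ids are placeholders, not real model names.
CATALOG = [
    Model("lite", Tier.FREE),
    Model("flagship", Tier.PRO),
    Model("code-tuned", Tier.PRO, domain="coding"),
    Model("governed", Tier.ENTERPRISE),
]


def picker_entries(
    user_tier: Tier,
    org_allowlist: Optional[set[str]] = None,
) -> list[tuple[str, bool]]:
    """Return (model_id, selectable) pairs for a model picker.

    Models above the user's tier stay listed but are not selectable, so the
    upgrade path remains visible. An organization allowlist, if given, hides
    every model that is not on it.
    """
    entries = []
    for model in CATALOG:
        if org_allowlist is not None and model.model_id not in org_allowlist:
            continue
        entries.append((model.model_id, model.tier <= user_tier))
    return entries


print(picker_entries(Tier.FREE))
print(picker_entries(Tier.ENTERPRISE, org_allowlist={"governed"}))
```

Listing locked models instead of hiding them is a design choice: it keeps the difference between tiers visible, which matters if the product wants users to understand what an upgrade would unlock.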