Inpainting lets users ask AI to change part of a piece of content without regenerating or affecting the rest of it. This makes collaborating with AI more predictable and controllable, reduces rework, and speeds up iteration.

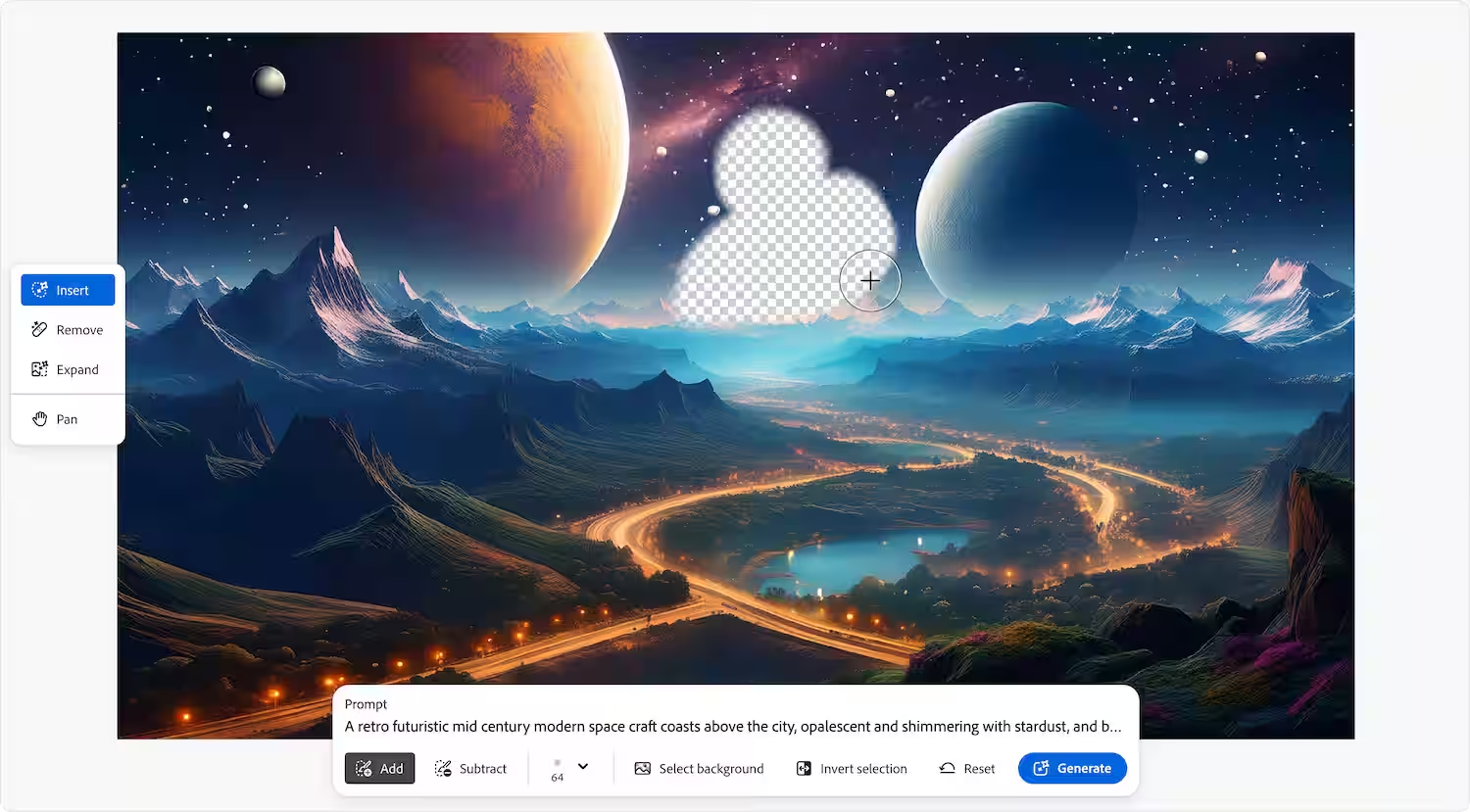

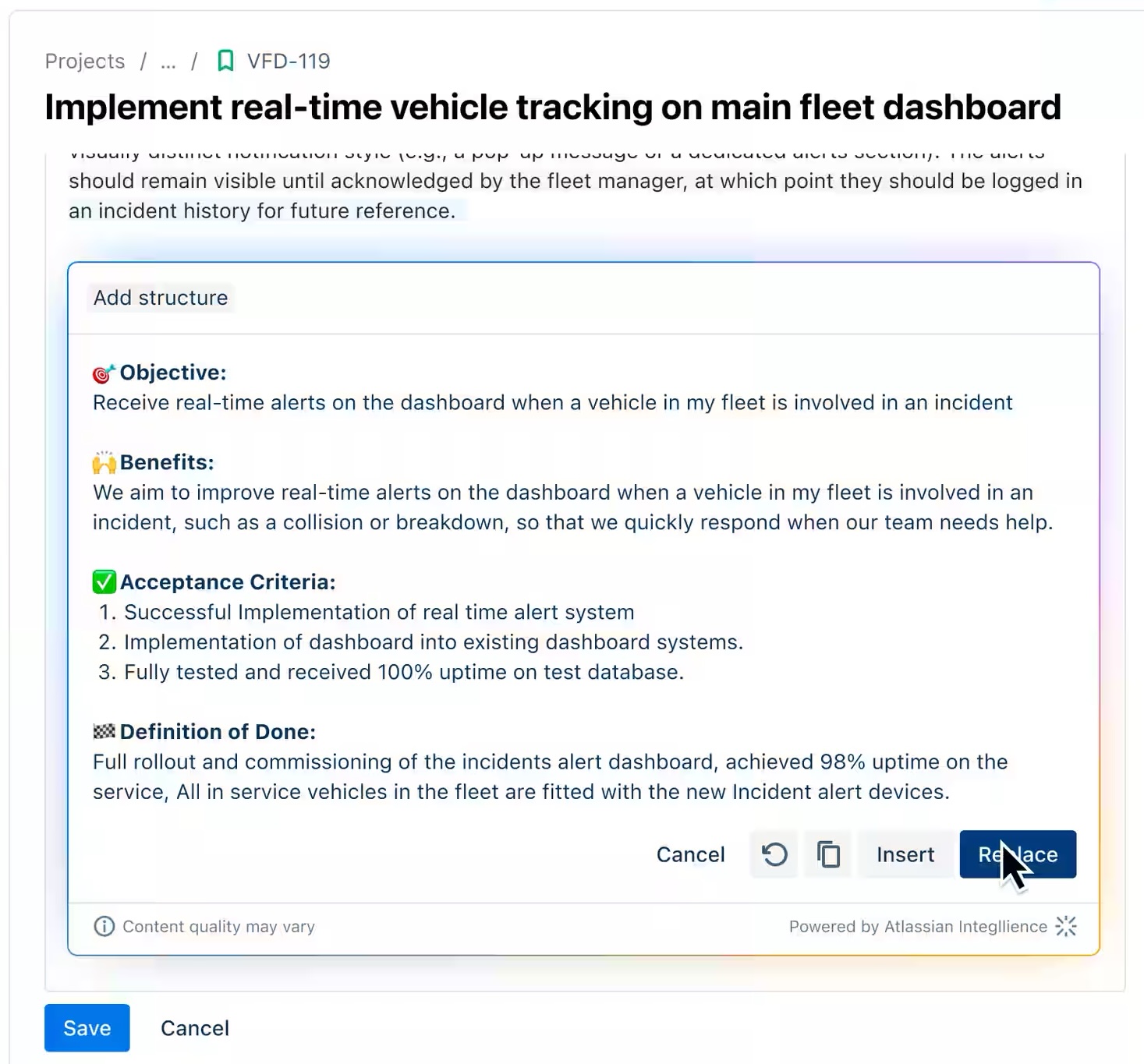

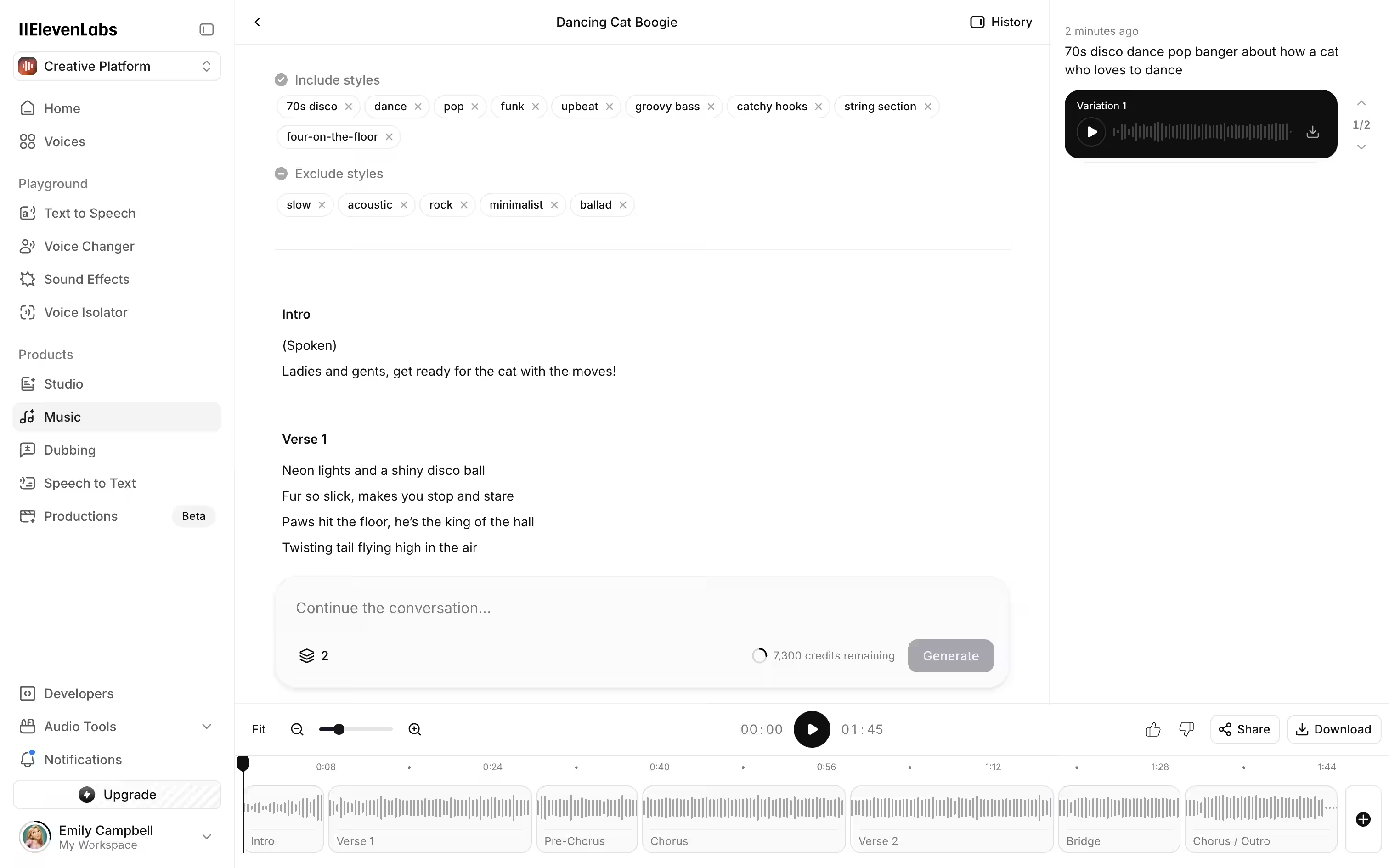

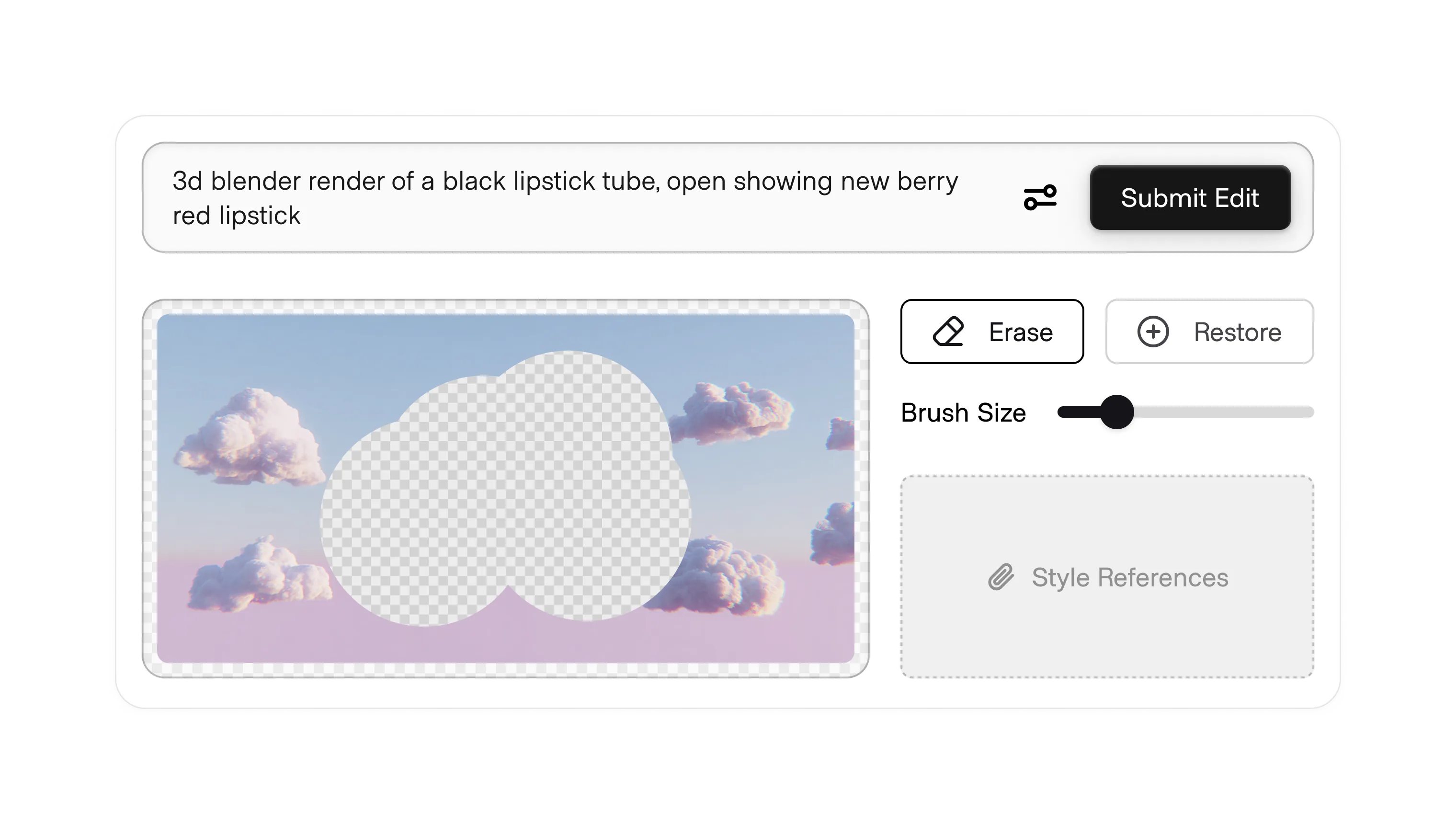

This pattern applies across content types. People can use it to edit existing content or to remix AI-generated content during a session.

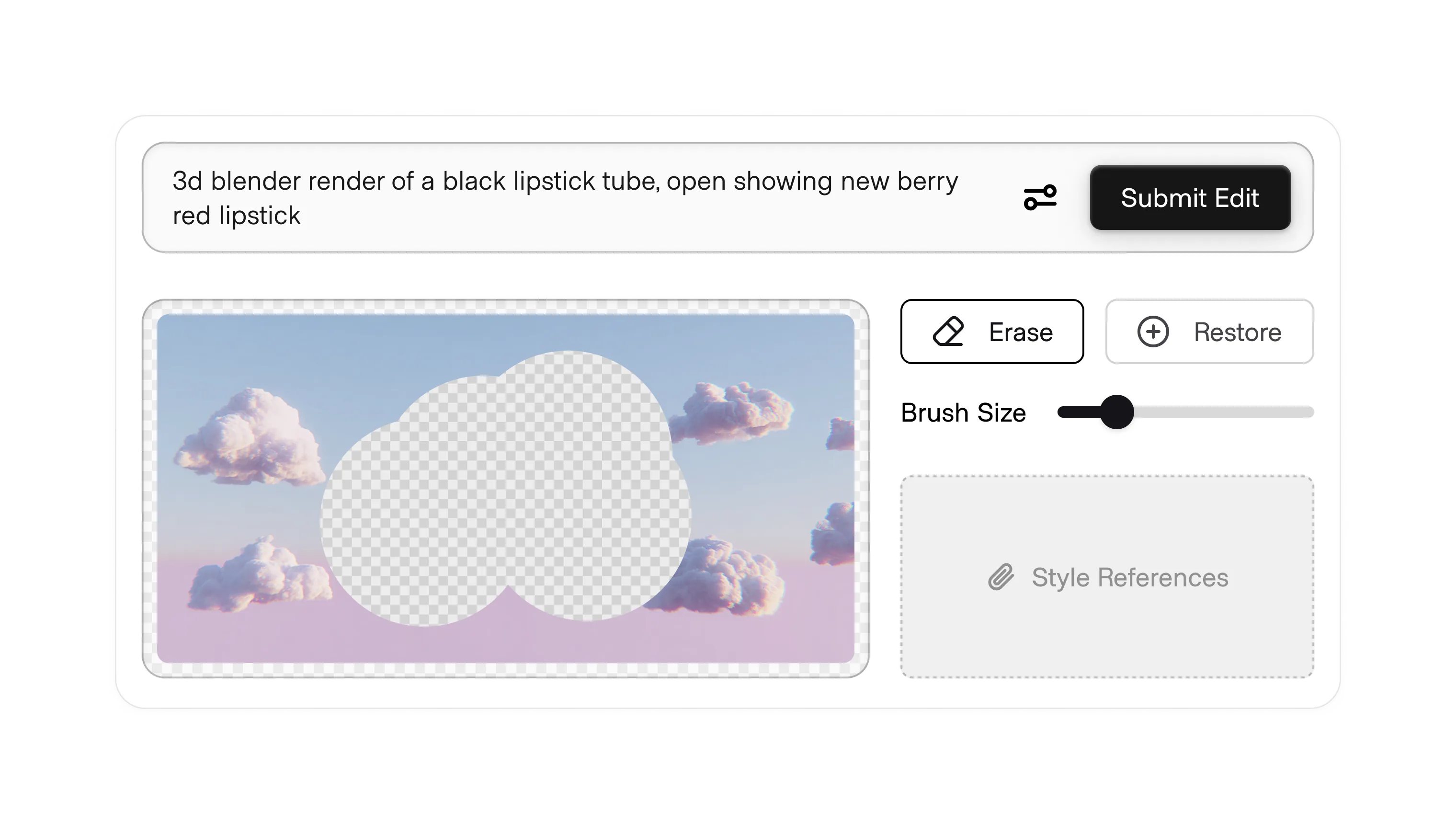

For example,

How discoverable and usable this pattern is depends in part on the existing conventions of the underlying medium.

Image editors already include an eraser brush for spot edits, so pairing the erased area with a prompt for what should replace it is likely to feel familiar.

Text editors have no comparable convention for erasing content in place, so inline actions attached to the highlighted text serve as the affordance for acting on a selection.

In every case, you can preserve people's agency over their work by letting them review a generation before it overwrites the original, making undo easy, and offering the choice to either replace the original or insert the new generation inline alongside it.
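For text, the safeguards above can be sketched as a small state model: a generation is staged as a pending proposal, the user can preview it, then accept it as a replacement, accept it as an inline insertion, or reject it, and every accepted change can be undone. This is a minimal sketch assuming a plain-string document and character-index selections; the class and method names (`InpaintingDocument`, `propose`, `accept_replace`, `accept_insert`) are hypothetical and not taken from any particular editor or library.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Proposal:
    """A generated replacement for a selected span, staged for review (hypothetical model)."""
    start: int  # index of the first selected character
    end: int    # index one past the last selected character
    text: str   # generated text awaiting the user's decision


@dataclass
class InpaintingDocument:
    """Hypothetical text document that never overwrites content without explicit acceptance."""
    text: str
    history: List[str] = field(default_factory=list)  # earlier versions, for undo
    pending: Optional[Proposal] = None

    def propose(self, start: int, end: int, generated: str) -> None:
        # Stage the generation; the original text is left untouched.
        if not 0 <= start <= end <= len(self.text):
            raise ValueError("selection out of range")
        self.pending = Proposal(start, end, generated)

    def preview(self) -> str:
        # Show the result of a replacement without committing it.
        p = self._require_pending()
        return self.text[:p.start] + p.text + self.text[p.end:]

    def accept_replace(self) -> None:
        # Swap the selected span for the generation.
        p = self._require_pending()
        self._commit(self.text[:p.start] + p.text + self.text[p.end:])

    def accept_insert(self) -> None:
        # Keep the original selection and add the generation directly after it.
        p = self._require_pending()
        self._commit(self.text[:p.end] + p.text + self.text[p.end:])

    def reject(self) -> None:
        # Discard the generation; the document is unchanged.
        self.pending = None

    def undo(self) -> None:
        # Restore the version that existed before the last accepted change.
        if self.history:
            self.text = self.history.pop()

    def _commit(self, new_text: str) -> None:
        self.history.append(self.text)
        self.text = new_text
        self.pending = None

    def _require_pending(self) -> Proposal:
        if self.pending is None:
            raise RuntimeError("no pending generation")
        return self.pending
```

For example, with the text `"The quick brown fox."`, calling `propose(4, 9, "sleepy")` leaves the text unchanged while `preview()` returns `"The sleepy brown fox."`. Calling `accept_replace()` commits that change, and `undo()` restores the original. Keeping the proposal separate from the committed text is what makes review and undo cheap: nothing is overwritten until the user decides.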